09 - Project 2

🧶 Project: Wool Defect Segmentation

1. Project Overview

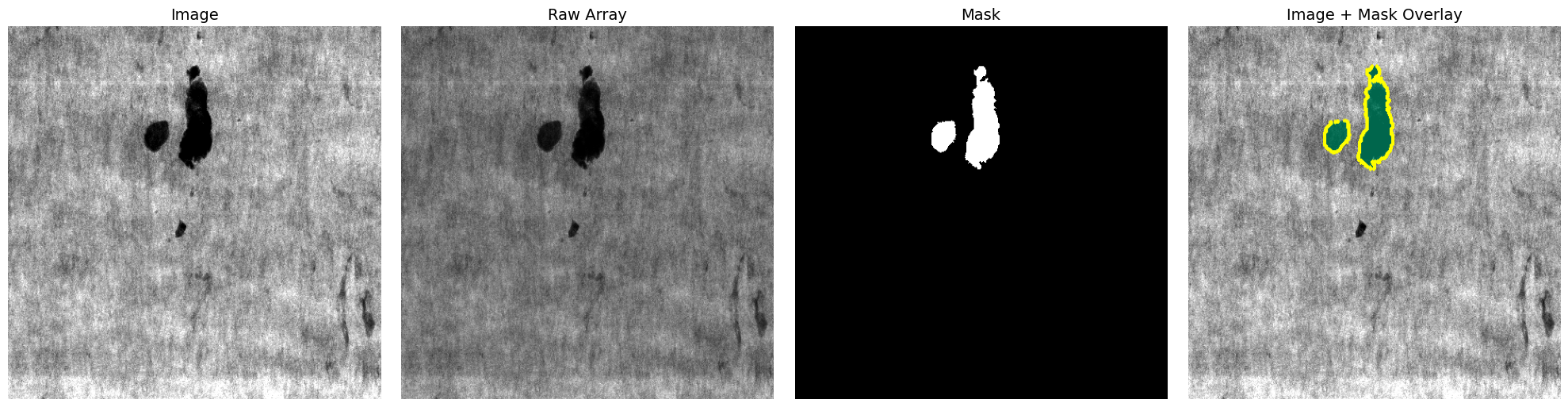

You will design, train, and evaluate a semantic segmentation system to detect defects in wool fabrics using X-ray data. The goal is a pixel-level mask that highlights defects (“chewing gum”). Use the provided aligned images, masks, and raw arrays as your starting point; extend with stronger models, augmentations, and clear evaluation.

2. The Dataset

The dataset provides the raw X-ray output of the wool surface (raw/) as float arrays of intensity values. The masks/ folder contains binary segmentation masks of the defects (“chewing gums”) occurring in the raw product. In a segmentation mask, a defect is represented by the value 255 (white), while the background is represented by the value 0 (black). To simplify initial processing and visual inspection, the raw X-ray arrays were also converted to images in uint8 format. Hence, each sample contains:

- Raw intensity array (raw X-ray data)

- RGB or grayscale image of fabric (converted from raw X-ray arrays)

- Binary defect mask

Dataset source: https://chmura.put.poznan.pl/s/7MttNHYPTkiXGcs

The dataset password is available on the eKursy platform in the Project 2 section.

Dataset structure:

wool_defects_segmentation_dataset/
├── train/
│   ├── images/
│   │   ├── 0016daf8-76cf-49f6-978c-dca4e3001254.png
│   │   ├── 01fb9d4d-146f-492c-bbf8-a3db005464e2.png
│   │   ├── 04a9ee47-3835-4ef3-b11c-6580f222b737.png
│   │   └── ... (120 total images)
│   ├── masks/
│   │   ├── 0016daf8-76cf-49f6-978c-dca4e3001254_mask.png
│   │   ├── 01fb9d4d-146f-492c-bbf8-a3db005464e2_mask.png
│   │   ├── 04a9ee47-3835-4ef3-b11c-6580f222b737_mask.png
│   │   └── ... (120 total masks)
│   └── raw/
│       ├── 0016daf8-76cf-49f6-978c-dca4e3001254_raw.npy
│       ├── 01fb9d4d-146f-492c-bbf8-a3db005464e2_raw.npy
│       ├── 04a9ee47-3835-4ef3-b11c-6580f222b737_raw.npy
│       └── ... (120 total raw arrays)
└── test/
    ├── images/
    │   ├── dcb98c1a-c058-4a97-a0a4-e212d241e973.png
    │   ├── 15b0f237-c29a-4b3b-9163-719db79710fa.png
    │   ├── fbb08b85-35fa-4cbb-b789-5c4a73ceeff2.png
    │   └── ... (50 total images)
    └── raw/
        ├── dcb98c1a-c058-4a97-a0a4-e212d241e973_raw.npy
        ├── 15b0f237-c29a-4b3b-9163-719db79710fa_raw.npy
        ├── fbb08b85-35fa-4cbb-b789-5c4a73ceeff2_raw.npy
        └── ... (50 total raw arrays)

Data Note: Each UUID in train/ has three corresponding files: an image ({uuid}.png), a mask ({uuid}_mask.png), and a raw X-ray array ({uuid}_raw.npy). Samples in test/ have no mask file. All files of a sample are aligned and share the same spatial dimensions.
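The naming scheme above makes it straightforward to collect the files of one sample from its UUID. The following is a minimal sketch using only the standard library; the helper names `list_uuids` and `sample_paths` are illustrative and not part of any provided code.

```python
from pathlib import Path


def list_uuids(split_dir):
    """Return sorted sample UUIDs, taken from the PNG names in images/."""
    return sorted(p.stem for p in (Path(split_dir) / "images").glob("*.png"))


def sample_paths(split_dir, uuid):
    """Build the expected file paths for one sample.

    The mask entry is None when the mask file is absent (as in test/).
    """
    split_dir = Path(split_dir)
    mask = split_dir / "masks" / f"{uuid}_mask.png"
    return {
        "image": split_dir / "images" / f"{uuid}.png",
        "raw": split_dir / "raw" / f"{uuid}_raw.npy",
        "mask": mask if mask.exists() else None,
    }
```

For example, `sample_paths("wool_defects_segmentation_dataset/train", uuid)` would return the three paths of a training sample, which can then be loaded with whichever image and array readers you prefer.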

Sample data from dataset

3. Technical Objectives

A. Data Pre-processing & Augmentation

- Decide which data type you want to use as model input: raw array or generated images.

- Preserve aspect ratio and keep 448x448 mask resolution.

- Normalize the inputs using per-channel mean/std.

- Apply data augmentation on training splits. Apply identical geometric transforms to images and masks; avoid intensity-only transforms on masks.
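As an illustration of the normalization and augmentation points above, here is a minimal NumPy sketch. It assumes images shaped (H, W, C) and masks shaped (H, W); the function names are hypothetical, and only flips and quarter-turn rotations are shown because they keep a square 448x448 sample at its original size. A dedicated augmentation library may be a better fit in practice.

```python
import numpy as np


def channel_stats(images):
    """Per-channel mean and std over a stack of images shaped (N, H, W, C)."""
    images = np.asarray(images, dtype=np.float32)
    return images.mean(axis=(0, 1, 2)), images.std(axis=(0, 1, 2))


def normalize(image, mean, std, eps=1e-6):
    """Standardize one (H, W, C) image with statistics from the training set."""
    return (image.astype(np.float32) - mean) / (std + eps)


def paired_augment(image, mask, rng):
    """Apply the same random flips and 90-degree rotation to image and mask."""
    if rng.random() < 0.5:  # horizontal flip
        image, mask = image[:, ::-1], mask[:, ::-1]
    if rng.random() < 0.5:  # vertical flip
        image, mask = image[::-1], mask[::-1]
    k = int(rng.integers(0, 4))  # number of quarter turns
    image = np.rot90(image, k, axes=(0, 1))
    mask = np.rot90(mask, k, axes=(0, 1))
    return np.ascontiguousarray(image), np.ascontiguousarray(mask)
```

Intensity changes (brightness, contrast, noise) would be applied to the image only, after the geometric step, so the mask stays strictly binary.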

Note: The output mask size must be 448x448. However, you can downscale the input or crop it into tiles for the model, then upscale or stitch the outputs to produce the final mask.
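The cropping option can be sketched as follows, assuming non-overlapping 224x224 tiles (the tile size and the function names are illustrative choices, not requirements). Overlapping tiles with blended borders are a common refinement that this sketch does not cover.

```python
import numpy as np


def split_tiles(array, tile=224):
    """Cut an (H, W) array into non-overlapping tile x tile patches, row-major."""
    h, w = array.shape
    assert h % tile == 0 and w % tile == 0, "size must be a multiple of tile"
    return (array.reshape(h // tile, tile, w // tile, tile)
                 .swapaxes(1, 2)
                 .reshape(-1, tile, tile))


def merge_tiles(tiles, height=448, width=448):
    """Inverse of split_tiles: stitch patches back into one (H, W) array."""
    tile = tiles.shape[-1]
    rows, cols = height // tile, width // tile
    return (tiles.reshape(rows, cols, tile, tile)
                 .swapaxes(1, 2)
                 .reshape(height, width))
```

With these defaults a 448x448 input yields four tiles, and `merge_tiles(split_tiles(x))` reproduces `x` exactly, so per-tile predictions can be stitched into a full-size mask.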

B. Segmentation Architecture

Choose a segmentation architecture and justify it. You can also use one of the following examples:

- Custom encoder-decoder (e.g., U-Net / U-Net++ with ResNet34/50 or EfficientNet-B3 encoder)

- DeepLabV3+ with a lightweight encoder (ResNet50/MobileNetV3) for thin structures

- Transformer-based (e.g., Swin-UNet or SegFormer-B0/B1) for long-range context

C. Training Loop & Evaluation

- Loss: BCE / Dice / Tversky / Focal or their combination.

- Batch size: fit to GPU (e.g., 4–32 at 448x448); use mixed precision if available.

- Epochs: ~200-300 with early stopping on val IoU/F1-Score (patience ~20).

- Metrics: IoU, F1-Score, precision/recall.
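For quick validation checks, the listed metrics can be computed directly from pixel counts. The sketch below is a plain NumPy version with a hypothetical function name; it treats any non-zero pixel as a defect. It is not the torchmetrics implementation, and its result may differ from the official score depending on how that metric is configured and averaged across images.

```python
import numpy as np


def binary_metrics(pred, target, eps=1e-7):
    """IoU, F1 (Dice), precision and recall for two binary masks.

    Any non-zero pixel counts as defect, so 0/255 and 0/1 masks both work.
    """
    pred = np.asarray(pred) > 0
    target = np.asarray(target) > 0
    tp = np.logical_and(pred, target).sum()   # defect predicted and present
    fp = np.logical_and(pred, ~target).sum()  # defect predicted, not present
    fn = np.logical_and(~pred, target).sum()  # defect missed
    return {
        "iou": tp / (tp + fp + fn + eps),
        "f1": 2 * tp / (2 * tp + fp + fn + eps),
        "precision": tp / (tp + fp + eps),
        "recall": tp / (tp + fn + eps),
    }
```

Note that with this `eps` convention two empty masks score 0 rather than 1, so decide explicitly how defect-free samples should be handled when averaging over a validation set.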

D. Inference & Post-processing

- Filtering: remove tiny blobs by area.

- Post-processing: fill small holes and optionally apply morphological closing for smoother masks.
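The area filtering and hole filling steps can be sketched with a simple connected-component pass. The version below uses only NumPy and a breadth-first flood fill with 4-connectivity; the function names and the `min_area` / `max_hole` thresholds are illustrative assumptions to be tuned on validation data. Morphological closing is not included, and library routines for labelling and morphology (for example in SciPy or OpenCV) will likely be much faster.

```python
from collections import deque

import numpy as np


def label_components(binary):
    """4-connected component labelling via breadth-first flood fill.

    Returns (labels, areas, touches_border); label 0 marks False pixels.
    """
    h, w = binary.shape
    labels = np.zeros((h, w), dtype=np.int32)
    areas, touches_border = [0], [False]  # index 0 is a placeholder
    for sy, sx in zip(*np.nonzero(binary)):
        if labels[sy, sx]:
            continue  # already assigned to an earlier component
        current = len(areas)
        labels[sy, sx] = current
        queue, area, border = deque([(sy, sx)]), 0, False
        while queue:
            y, x = queue.popleft()
            area += 1
            border |= y in (0, h - 1) or x in (0, w - 1)
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if (0 <= ny < h and 0 <= nx < w
                        and binary[ny, nx] and not labels[ny, nx]):
                    labels[ny, nx] = current
                    queue.append((ny, nx))
        areas.append(area)
        touches_border.append(border)
    return labels, np.array(areas), np.array(touches_border)


def clean_mask(mask, min_area=20, max_hole=20):
    """Drop blobs below min_area and fill enclosed holes up to max_hole pixels."""
    fg = np.asarray(mask) > 0
    labels, areas, _ = label_components(fg)
    fg = (areas >= min_area)[labels] & fg
    # Holes are background components that do not reach the image border.
    holes, hole_areas, on_border = label_components(~fg)
    fill = (hole_areas <= max_hole) & ~on_border
    fill[0] = False  # label 0 is not a background component
    fg |= fill[holes]
    return fg.astype(np.uint8) * 255
```

The function returns a uint8 mask with values 0 and 255, matching the mask encoding used in the dataset.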

4. Deliverables

Short Report

The report should be at most 2-3 pages long and should include:

- Data preprocessing and normalization choices, including an explanation of the pre- and post-processing methodology

- Model architecture, loss, optimizer, and hyperparameters

- Learning curves and validation metrics (e.g. IoU, F1-Score, precision/recall)

- Qualitative panel: image, ground-truth mask, prediction, overlay with contours

- Ablations (e.g., input size, input format, model architecture, model encoder, loss variants)

Test Set Results

Compressed folder (submission.zip) with predicted segmentation masks for the test set (PNG format). Segmentation masks should comply with the following naming convention: {uuid}_mask.png.

The model will be evaluated using the F1-Score metric (as implemented in the torchmetrics library), computed between the predicted segmentation masks and the ground-truth masks.
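Before uploading, it can help to check the file names and build the archive programmatically. The sketch below uses only the standard library and makes two assumptions that the instructions do not state: that masks sit at the root of the archive, and that UUIDs are lowercase hexadecimal as in the dataset listing. The helper names are hypothetical.

```python
import re
import zipfile
from pathlib import Path

# A lowercase UUID followed by the required "_mask.png" suffix.
MASK_NAME = re.compile(r"^[0-9a-f]{8}(-[0-9a-f]{4}){3}-[0-9a-f]{12}_mask\.png$")


def mask_filename(uuid):
    """File name required for the predicted mask of one test sample."""
    return f"{uuid}_mask.png"


def build_submission(mask_dir, zip_path="submission.zip"):
    """Zip every mask in mask_dir after validating names; return the names."""
    mask_dir = Path(mask_dir)
    names = sorted(p.name for p in mask_dir.glob("*_mask.png"))
    bad = [n for n in names if not MASK_NAME.match(n)]
    if bad:
        raise ValueError(f"unexpected file names: {bad}")
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for name in names:
            zf.write(mask_dir / name, arcname=name)  # stored at archive root
    return names
```

Comparing the length of the returned list against the 50 test images is a cheap final check that no prediction is missing.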

Source Code

Export the source code to a compressed folder (source_code.zip). It should include:

- Training code, inference script, and config (paths, hyperparameters, augmentations)

- Best model checkpoint

Acknowledgment

We thank Rockwool for providing the wool defect dataset with X-ray imagery and pixel-level segmentation annotations. This resource enables development of robust automated defect detection systems for industrial quality control applications.